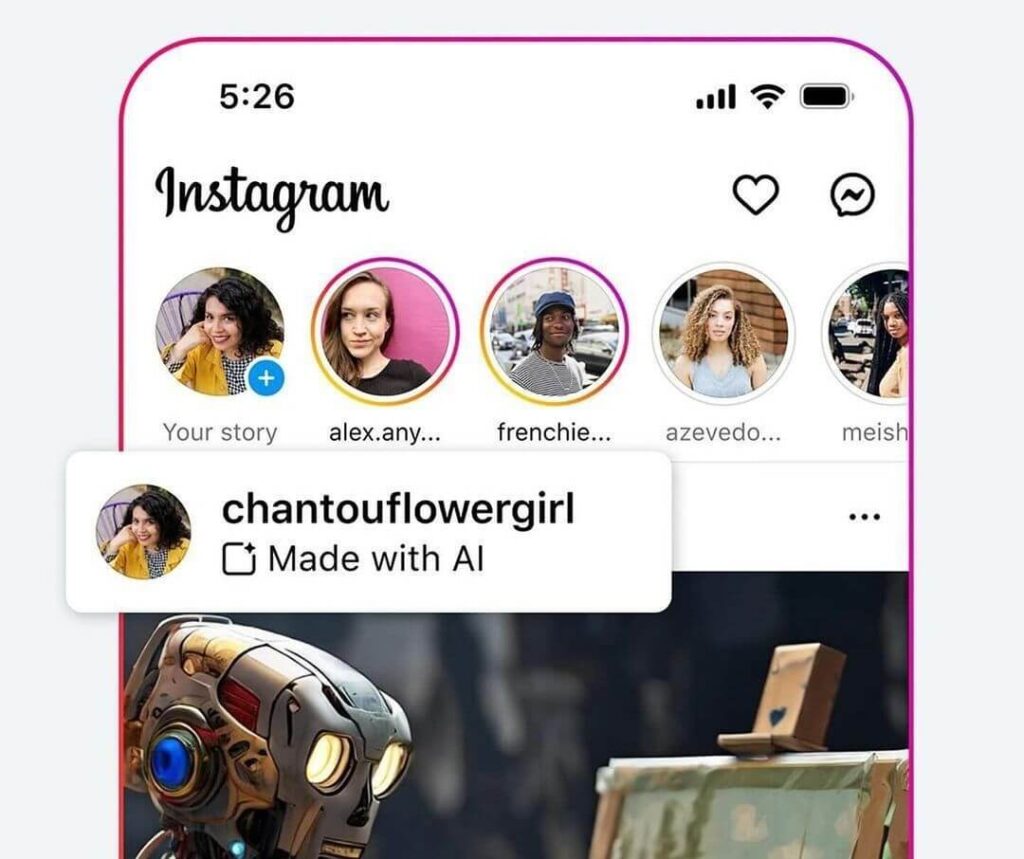

Made with AI – Hitting Meta Soon

So, get this.

Meta is introducing these new “Made with AI” labels for content that’s created using artificial intelligence.

The idea is to be more transparent and help users differentiate between content made by humans and posts generated by AI. It’ll apply to all kinds of content – feed posts, stories, videos, audio, images – you get the picture.

Now, content creators will be responsible for disclosing when they use AI tools to generate their stuff. However, Meta knows the importance of ‘trust but verify’, so they also plan to detect AI-generated content on their own, using things like invisible watermarks and metadata.

From what we know, these labels will apply to content created using Meta’s own AI systems as well as third-party AI tools. They’re planning a gradual rollout, starting with a small group of users in May and expanding over time.

It’s all part of Meta’s efforts to tackle the challenges that come with AI-generated content and combat the spread of misinformation on their platforms. In cases where AI-generated content could seriously mislead the public about important matters, Meta might provide additional context alongside the labels. Think Community Notes on X.

We’ll have to see how effective these labels are and how users respond to this new feature, but it’s definitely a step in the right direction.

I, for one, am excited to see this on just about everything I post from here on out 😎

Tweets that matter

Google continues to shake everything up. They’re evolving a core business model that had gone largely untouched for years, and they’re doing it at a lightning-fast (and reckless) rate.

I’ve been in SEO for over 15 years now, and there’s never been a dull moment.

The recent changes, though, from the Core updates to the Helpful Content Update, have come in wave after wave after wave, and they’re decimating sites left and right.

The question now comes down to how we will evolve in search.

- Sites like Google have considered themselves ‘Search Engines’ for years.

- AI tools like Perplexity 🔗 consider themselves ‘Answer Engines’ now in this new wave of AI tech.

So in the coming months, and even years, how will you continue to find your answers?

Around the web

🔈 Suno.ai is the wildest AI startup on the web right now

🎥 This AI commercial is hilarious

🔍 Search Three LLMs at Once with this creative setup

🤫 Whisper Web transcribes in the browser

Apple Reveals ReALM

Apple is finally telling us more about their AI efforts.

Their researchers have just unveiled a new language model called ReALM, and they’re claiming it can outperform OpenAI’s GPT-4 in certain tasks (which is quickly becoming an underwhelming statement).

Now, I know you’re probably thinking, “What makes ReALM so special?” Well, according to the research paper published just a few days ago, ReALM takes a novel approach to enhancing AI’s ability to understand and resolve contextual references. In other words, it can better comprehend things like on-screen elements, conversational topics, and background information.

This is a big deal because it’s crucial for virtual assistants like Apple’s Siri to provide more relevant and helpful responses based on the user’s current context. Imagine Siri being able to understand not just what you’re saying, but also what’s happening on your screen or in the background. That would make it really helpful.

We haven’t seen the full extent of ReALM’s capabilities yet, but the initial claims of outperforming GPT-4 have definitely generated a buzz within the AI community. And while large language models like GPT-4 have shown incredible power in various tasks, they have been somewhat underutilized for this kind of reference resolution to date.

While we’ll have to wait and see how ReALM performs in the real world, it’s definitely an exciting development in the field of AI. It could potentially lead to more advanced and context-aware virtual assistants and other AI applications in the future.