People make up stuff on the internet all the time.

People’s content is what AI is trained on.

People train AI.

We now ask AI to create content.

Still think AI isn’t capable of making anything up?

🙃

We know that everyone online today should have some basic level of internet and social media literacy.

But now, we need AI literacy too.

And it’s taken us a minute to catch on.

Not because we’re naïve, but because AI sounds so confident and has access to so much information that it’s easy to believe.

Let’s cut to the chase – AI makes things up.

This is what we call an AI hallucination. And if you’re using AI in your business, you need to understand what hallucinations are and how to prevent them.

🧠 The Most Dangerous AI Mistake

Large language models predict the next word; they don’t verify truth. When those predictions stray, you get “hallucinations.”

Key Facts

- 🛡️ Prevention — Retrieval, cross-checks, and “uncertain” responses cut errors without slowing you down.

- 🧠 Text > Truth — Hallucinations appear because language models predict plausible text, not verified truth.

- 📄 Big Impact — Benchmarks have found fabricated citations in 15–94% of answers depending on the tool, premium ones included.

Here’s the thing…

When AI hallucinates, it might invent dates that sound reasonable but never happened.

It might quote reports that don’t exist. Sometimes it makes logical leaps that seem to follow from the information you gave it, but actually go way beyond what the source material says.

Here’s what makes this tricky: the AI never sounds uncertain when it’s wrong.

It delivers fiction with the same confidence as fact.

That’s great when you want creative ideas but dangerous when you need accurate information.

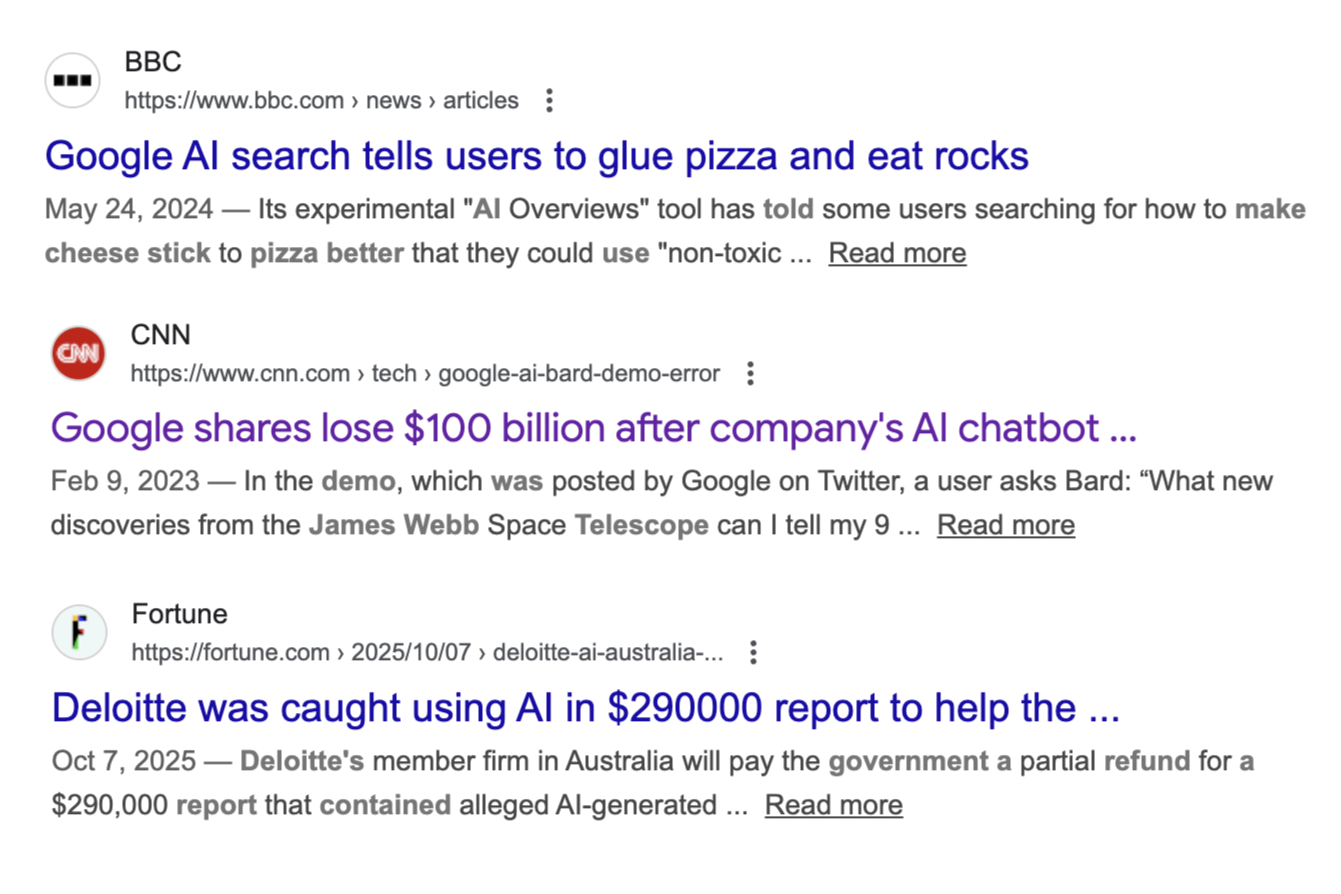

🚩 Real Companies, Real Consequences

This isn’t some abstract tech problem. Big companies have learned this lesson the hard way.

Remember when Google demoed its Bard chatbot and it claimed the James Webb Space Telescope took the first picture of an exoplanet, which it hadn’t? Alphabet’s stock dropped so fast that the company lost about $100B in market value in a single day.

Google’s AI told people to put glue on pizza and suggested eating rocks for minerals. These weren’t glitches.

The AI had found satirical content online and couldn’t tell it was a joke.

Deloitte had to refund hundreds of thousands of dollars to the Australian government after their report included footnotes and citations that simply didn’t exist.

These aren’t headlines you want to see about your company.

⬇️⬇️⬇️

Even medical transcription isn’t safe (no industry is).

OpenAI’s Whisper tool, which more than 30,000 medical workers use, sometimes adds words that were never spoken. Imagine the risk when those invented words end up in patient records.

🤔 Why This Keeps Happening

So why can’t tech companies just…fix this?

Hallucinations aren’t really bugs.

They’re more like side effects of how these systems work.

Let’s break it down. ⏬

→ There’s a fundamental mismatch between what we want and what AI is built to do.

→ Models are trained to predict text that sounds right, not to verify facts.

→ The internet is messy. AI models train on massive amounts of web content that includes old information, conflicting claims, and straight-up wrong data.

→ The way we ask questions matters. If you prompt an AI to “give me five reasons” for something, it will give you five reasons even if only two good ones exist. The other three might be creative fiction.

→ When AI tries to reason through multiple steps, small errors compound. A tiny mistake in step one becomes a bigger mistake in step two, and by step five you have a confident conclusion built on a foundation of errors.
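To put rough numbers on that last point, here is a small sketch. It assumes every reasoning step is right with the same probability and that steps fail independently. Both are simplifications chosen for illustration, not measured properties of any model, and `chain_accuracy` is a made-up helper name.

```python
def chain_accuracy(step_accuracy: float, steps: int) -> float:
    """Chance that every step in a chain is correct.

    Assumption (for illustration only): each step succeeds or fails
    independently with the same probability.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must not be negative")
    return step_accuracy ** steps


# Show how a 95%-per-step chain degrades as it gets longer.
for steps in (1, 2, 5, 10):
    print(f"{steps:>2} steps at 95% each: {chain_accuracy(0.95, steps):.0%} chance the whole chain holds")
```

Under those assumptions, five steps at 95% each leave roughly a 77% chance that the full chain is right, and ten steps leave about 60%.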

Check out the graph below, which shows a recent head-to-head evaluation of the top LLMs. It’s one more reason I love using Perplexity as my “answer” engine (I even use it for the research behind my content, including this newsletter)!

⚖️ How I Keep AI Honest in Real Projects

After years of building AI systems, I’ve developed strategies that actually work.

No fancy tricks.

Just practical approaches that reduce mistakes.

- Use RAG (Retrieval-Augmented Generation)

Instead of letting AI answer from its general training, I make it pull from specific documents I trust. When the model has to cite exactly where it found information, mistakes drop significantly. You can even track which parts of which documents it used for each answer.
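Here is a minimal sketch of that retrieve-then-answer flow. Everything in it is hypothetical: the documents, the source ids, and the simple word-overlap scoring, which stands in for the embedding search a real system would more likely use. It stops at building the prompt, so no model is called.

```python
import re

# Hypothetical trusted documents, keyed by a made-up source id.
DOCUMENTS = {
    "refund-policy": "A refund is available within 30 days of purchase with a receipt.",
    "shipping-policy": "Standard shipping takes 5 to 7 business days within the US.",
    "warranty": "Hardware is covered by a one year limited warranty.",
}

# Tiny stopword list so filler words do not count as matches.
STOPWORDS = {"a", "an", "the", "to", "of", "is", "by", "with", "can", "i", "get"}


def keywords(text: str) -> set:
    """Lowercase words and numbers in the text, minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, documents: dict, top_k: int = 2) -> list:
    """Return up to top_k (source_id, text) pairs sharing the most keywords with the question."""
    wanted = keywords(question)
    scored = [(len(wanted & keywords(text)), doc_id, text) for doc_id, text in documents.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for score, doc_id, text in scored if score > 0][:top_k]


def build_grounded_prompt(question: str, passages: list) -> str:
    """Put the retrieved passages, tagged with their source ids, in front of the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Using only the context below, answer the question and cite the source id "
        "in brackets after each fact. If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "Can I get a refund 20 days after purchase?"
passages = retrieve(question, DOCUMENTS)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

Because each passage carries its source id into the prompt, any bracketed citation in the answer can be traced back to the exact document it came from.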

- Get Second Opinions

For important decisions, I run questions through two or three different AI models. When their answers agree and match what’s in my database, I can trust the response. When they disagree, that’s my signal to dig deeper.
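A rough sketch of that agreement check is below. It assumes you have already collected each model’s answer as a string. The model names are placeholders, and the exact-match comparison only makes sense for short factual answers; longer answers would need a fuzzier comparison.

```python
from collections import Counter
from typing import Optional


def normalize(answer: str) -> str:
    """Lowercase and strip punctuation and extra spaces so trivial differences do not count."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in answer.lower())
    return " ".join(cleaned.split())


def cross_check(answers: dict, reference: Optional[str] = None) -> dict:
    """Mark a result 'trusted' only if every model agrees and, when given, the reference matches."""
    if not answers:
        raise ValueError("need at least one answer to compare")
    counts = Counter(normalize(text) for text in answers.values())
    top_answer, votes = counts.most_common(1)[0]
    unanimous = votes == len(answers)
    matches_reference = reference is None or top_answer == normalize(reference)
    status = "trusted" if unanimous and matches_reference else "needs_review"
    return {"status": status, "answer": top_answer, "votes": votes, "total": len(answers)}


# Placeholder model names and answers.
agreeing = {"model_a": "30 days", "model_b": "30 days.", "model_c": " 30 Days"}
conflicting = {"model_a": "30 days", "model_b": "14 days"}

print(cross_check(agreeing, reference="30 days"))
print(cross_check(conflicting))
```

The first call comes back `trusted` because all three answers normalize to the same text and match the reference; the second comes back `needs_review`, which is the signal to dig deeper.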

- Set Clear Boundaries

In sensitive areas like health, finance, or legal advice, I configure systems to block unsupported claims by default. Every factual statement needs evidence right next to it. No evidence means no statement.
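One simple way to enforce “no evidence means no statement” is to drop any claim whose quoted evidence cannot be found word for word in a trusted source. The sketch below assumes the model’s output has already been split into claims with an evidence quote each; the `Claim` class and the sample text are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    text: str
    evidence: str  # quote expected to appear verbatim in a trusted source; "" if none


def filter_supported(claims: list, sources: list) -> tuple:
    """Split claims into (supported, blocked) by checking each evidence quote against the sources."""
    supported, blocked = [], []
    for claim in claims:
        quote = claim.evidence.strip().lower()
        if quote and any(quote in source.lower() for source in sources):
            supported.append(claim)
        else:
            blocked.append(claim)
    return supported, blocked


# Made-up source text and claims.
sources = ["A refund is available within 30 days of purchase with a receipt."]
claims = [
    Claim("Refunds are possible for 30 days.", "available within 30 days of purchase"),
    Claim("Refunds are processed instantly.", ""),
    Claim("Refunds never need a receipt.", "no receipt is required"),
]

supported, blocked = filter_supported(claims, sources)
print([c.text for c in supported])
print([c.text for c in blocked])
```

A verbatim check is deliberately strict: it confirms the quote exists, not that the quote actually backs the claim, so a human or a second check still has to judge that part.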

- Make Your Calls “Strict”

Not everything needs to run through the ChatGPT or Claude web apps, whose default settings you can’t change. For important tasks, go straight to the API and set your “temperature” lower. A lower temperature makes the model stick to its most likely next words instead of sampling less likely ones, which makes answers more consistent and can reduce (but not eliminate) “making things up” to satisfy the request.
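For intuition, temperature rescales the model’s scores for each candidate next word before one is sampled. The sketch below uses the standard softmax-with-temperature formula on three made-up scores; real models work over many thousands of candidates, and the words and numbers here are purely illustrative.

```python
import math


def softmax_with_temperature(scores: list, temperature: float) -> list:
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0")
    scaled = [score / temperature for score in scores]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - highest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up candidate words and scores, for illustration only.
candidates = ["Paris", "Lyon", "Rome"]
scores = [3.0, 2.0, 1.0]

for temperature in (1.0, 0.2):
    probs = softmax_with_temperature(scores, temperature)
    summary = ", ".join(f"{word} {prob:.1%}" for word, prob in zip(candidates, probs))
    print(f"temperature {temperature}: {summary}")
```

With these scores, the top candidate goes from about 67% of the probability at temperature 1.0 to about 99% at 0.2. The model becomes more predictable, but it is still only as right as its top guess.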

- Make “I Don’t Know” Acceptable

This one’s huge. I build systems that can comfortably say “I don’t have enough information” or “This needs human review.” When AI can admit uncertainty, it stops making things up just to have an answer. A plain-language instruction in your prompt telling it exactly this is usually enough to cover most cases.
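As a sketch of how that can be wired up, the snippet below adds an abstain instruction to a prompt and then checks a reply for the agreed phrases so it can be routed to a person. The function names and exact wording are assumptions for illustration, and the reply is a hard-coded string rather than real model output.

```python
# Phrases the prompt asks the model to use when it cannot answer.
ABSTAIN_PHRASES = (
    "i don't have enough information",
    "this needs human review",
)


def add_abstain_instruction(task_prompt: str) -> str:
    """Prepend a plain-language instruction that makes abstaining acceptable."""
    instruction = (
        "If you cannot answer from the information provided, reply exactly: "
        "\"I don't have enough information.\" Do not guess."
    )
    return f"{instruction}\n\n{task_prompt}"


def needs_human(reply: str) -> bool:
    """True when the reply contains one of the agreed abstain phrases."""
    lowered = reply.lower().replace("\u2019", "'")  # treat curly apostrophes like straight ones
    return any(phrase in lowered for phrase in ABSTAIN_PHRASES)


prompt = add_abstain_instruction("What is our refund window for enterprise customers?")
print(prompt)
print(needs_human("I don't have enough information."))
print(needs_human("The refund window is 30 days."))
```

Asking for an exact phrase makes the abstention easy to detect in code, which is what lets you hand those cases to a human instead of shipping a guess.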

🤝 Prompts That Actually Help

Want to reduce hallucinations? Copy these prompts and adapt them for your needs:

For Grounded Answers: "You are a cautious research assistant. Using only the information in the context below, answer the question. If the context does not contain the answer, say: 'The context does not provide enough information to answer confidently.' After each factual statement, include the supporting quote in parentheses. Do not guess."

For Writing with Citations: "I'm drafting an article. First, list bullet points of factual claims you can support with a citation, each with a candidate source link. Then separately list interesting but speculative ideas, clearly labeled as speculative. Do not invent studies, URLs, or publication names; if unsure, say 'source unclear.'"

For Risk-Aware Analysis: "Answer in three sections: (1) Directly supported by evidence; (2) Plausible but uncertain; (3) Unknown or cannot answer. If you aren't highly confident something is documented, place it in section 2 or 3."

For Admitting Uncertainty: "If you are less than 80 percent confident or if sources conflict, respond: 'Uncertain, needs human review' and explain why."

For Structured Output: "Return JSON with an array of claims. Each claim must include: text, confidence score from 0 to 1, whether it's supported by evidence (true or false), and exact evidence quotes. No outside knowledge."
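The structured-output prompt pays off when code checks the result before anyone reads it. Here is a minimal sketch of that check. The field names (`text`, `confidence`, `supported`, `evidence`) and the 0.8 cutoff are assumptions that mirror the prompts above, not a fixed standard, and the sample JSON is hand-written rather than real model output.

```python
import json


def is_valid_claim(claim) -> bool:
    """Check that a claim has the expected fields with sensible types and ranges."""
    if not isinstance(claim, dict):
        return False
    confidence = claim.get("confidence")
    evidence = claim.get("evidence")
    return (
        isinstance(claim.get("text"), str)
        and isinstance(confidence, (int, float))
        and not isinstance(confidence, bool)  # bool is a subclass of int in Python
        and 0 <= confidence <= 1
        and isinstance(claim.get("supported"), bool)
        and isinstance(evidence, list)
        and all(isinstance(quote, str) for quote in evidence)
    )


def triage_claims(raw_json: str, min_confidence: float = 0.8) -> tuple:
    """Split claims into (accepted, needs_review). Malformed JSON raises ValueError."""
    data = json.loads(raw_json)
    if isinstance(data, dict):
        data = data.get("claims", [])
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of claims")
    accepted, needs_review = [], []
    for claim in data:
        ok = (
            is_valid_claim(claim)
            and claim["supported"]
            and len(claim["evidence"]) > 0
            and claim["confidence"] >= min_confidence
        )
        (accepted if ok else needs_review).append(claim)
    return accepted, needs_review


# Hand-written sample standing in for a model response.
sample = json.dumps({"claims": [
    {"text": "Refunds are available within 30 days.", "confidence": 0.95,
     "supported": True, "evidence": ["A refund is available within 30 days of purchase"]},
    {"text": "Refunds are processed instantly.", "confidence": 0.4,
     "supported": False, "evidence": []},
]})

accepted, needs_review = triage_claims(sample)
print(len(accepted), "accepted;", len(needs_review), "for review")
```

Keep in mind that a model’s self-reported confidence score is itself generated text, so treat the cutoff as a rough filter rather than a guarantee.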

Here’s a quick tip that might save you headaches: avoid prompts that say “be creative” or “sound authoritative” when you need facts. That kind of language pushes AI to produce something impressive even when the honest answer is “we don’t know.”

🚀 What’s Next

If this helped you think differently about using AI safely, forward it to someone else who’s building with these tools.

And if you want to discuss this stuff with other people facing the same challenges, come join us in CTRL + ALT + BUILD. We share setups, prompts, and real case studies every single week.

AI hallucinations aren’t going away anytime soon. But with the right approach, you can use these powerful tools while protecting your business from their creative fiction.

Give these prompts a try, and let me know how it goes!

P.S. We’ve still got 30+ free lifetime accounts left for QuickSign

P.P.S. I have one VIP spot left for January too