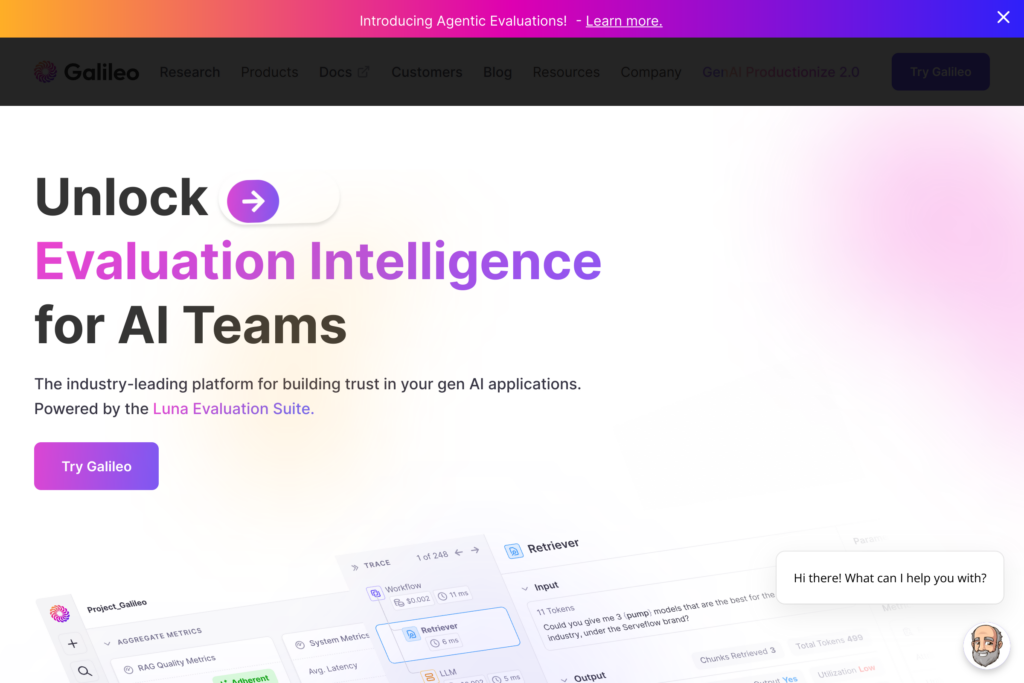

Galileo is an AI development platform that helps technical teams build and maintain better machine learning models, with a focus on large language models and text-based applications. The platform provides tools for data evaluation, model testing, and performance monitoring through features like step-by-step tracing, custom metrics tracking, and experiment management.

Teams ranging from startups to large enterprises use Galileo to improve their AI applications. The platform integrates with major providers such as OpenAI and Hugging Face and includes prompt management tools intended to reduce errors and improve output accuracy. For technical leads and ML engineers, Galileo offers detailed insight into model behavior and performance, and helps them optimize cost and resource usage.

Whether you’re developing new AI applications or maintaining existing ones, Galileo provides tools to evaluate data quality, test model performance, and monitor production systems. The platform helps teams catch and fix issues early, track experiments systematically, and keep performance consistent, all while giving clear visibility into how their AI systems operate and how well they work.