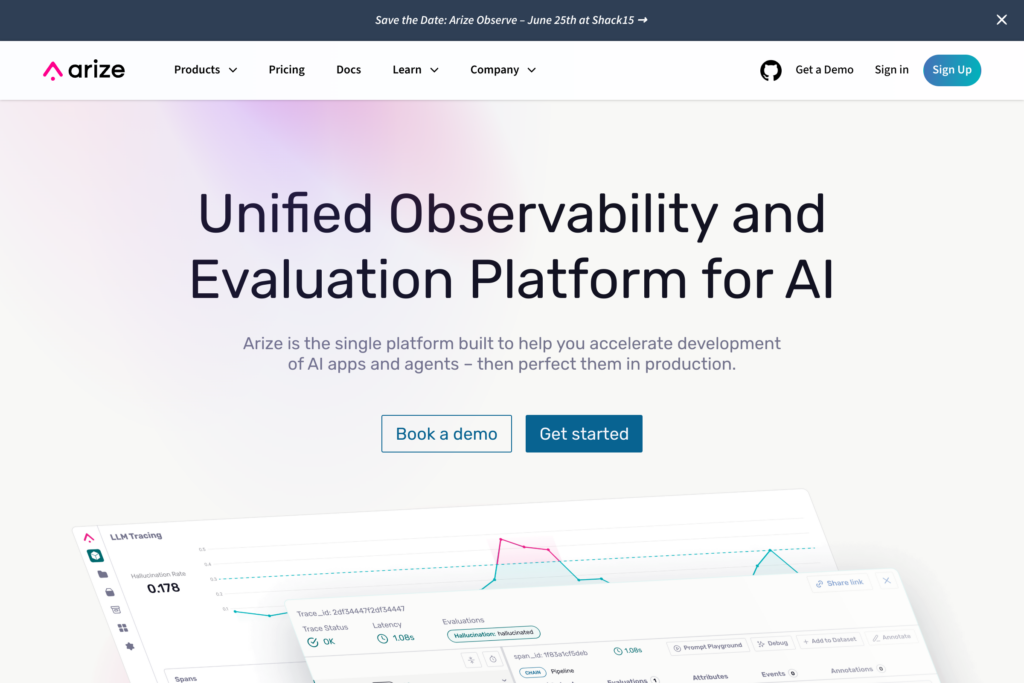

Arize AI is a monitoring and evaluation platform that helps AI teams track and improve machine learning models in production. It detects issues automatically, supports root-cause analysis, and surfaces insights for improving model performance in both development and deployment. Real-time monitoring, customizable alerts, and dedicated tooling for large language models (LLMs) help teams find and fix problems before they reach end users.

Technical teams use Arize AI to maintain visibility into their AI systems through centralized monitoring dashboards, performance tracing, and automated anomaly detection. The platform integrates with existing ML workflows and supports major AI frameworks, which simplifies adding model oversight to an existing stack. Whether you’re running traditional ML models or newer LLM and retrieval-augmented generation (RAG) systems, Arize AI provides tools to evaluate, troubleshoot, and optimize your AI applications.
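To make "automated anomaly detection" and threshold-based alerts concrete, here is a minimal, generic sketch in plain Python. It is not Arize AI's API or its detection method. The function name `detect_anomalies`, the trailing-window z-score rule, and the sample accuracy values are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def detect_anomalies(values, window=5, z_threshold=3.0):
    """Return indices whose value deviates from the trailing-window
    baseline by more than z_threshold standard deviations.

    Hypothetical helper for illustration only; not part of any Arize SDK.
    """
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: treat any change at all as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


# Illustrative daily accuracy readings for a model (made-up numbers).
daily_accuracy = [0.91, 0.92, 0.90, 0.91, 0.92, 0.91, 0.78, 0.91]
flagged = detect_anomalies(daily_accuracy, window=5, z_threshold=3.0)

for day in flagged:
    print(f"ALERT: day {day} accuracy {daily_accuracy[day]:.2f} is outside the expected range")
```

In this sketch the `z_threshold` parameter plays the role of a customizable alert: lowering it flags smaller deviations, at the cost of more false alarms. A production monitoring platform would typically apply more robust baselines than a simple trailing window.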

The platform takes a practical approach to AI observability, combining automated monitoring with detailed evaluation capabilities. By connecting development and production environments, Arize AI helps teams keep AI systems reliable and shortens the time needed to detect and resolve issues. This makes it particularly valuable for organizations whose models must perform consistently and accurately at scale.