01: Apple’s Slow AI March

In the ever-evolving world of AI, Apple is taking a different approach.

While other tech giants are racing to release their large language models (LLMs), Apple is moving at a more measured pace. The company is currently testing an AI-powered chatbot, internally called “Apple GPT,” but there are no solid plans yet for a public release.

Apple’s AI chatbot is built on its own LLM framework called Ajax, which runs on Google Cloud and is built with Google JAX, a framework created to accelerate machine learning research. Multiple teams are working on the project, including on its potential privacy implications, but little else has been disclosed.

This slow and steady approach might seem curious in a fast-paced market. Still, it’s worth noting that Apple has always prioritized user privacy and quality over speed. The company is likely taking the time to ensure that its AI chatbot meets its high standards for privacy and functionality. This is in line with Tim Cook’s answer in May, after Apple’s earnings call, when he was asked about the company’s approach to the AI race: “I do think it’s very important to be deliberate and thoughtful in how you approach these things, and there’s a number of issues that need to be sorted.”

It’s also worth noting that Apple’s move into AI could signal a significant upgrade for Siri, Apple’s voice assistant. Siri pioneered the voice assistant space but has been criticized for falling behind its competitors in recent years. With the development of Apple GPT, we could see a vastly improved Siri.

🧠 Apple’s approach to AI development offers some valuable insights. In a market where speed often wins over everything else, Apple is prioritizing quality, privacy, and secrecy. This could be a winning strategy in the long run as consumers become more aware of and concerned about their digital privacy.

A notable thought: integrating Apple GPT with Apple’s Vision Pro could have us rethink the user experience entirely.

Imagine a world where your devices not only understand and respond to your commands more accurately but also perceive and interpret visual data in real time. This could lead to more intuitive interfaces, intelligent home automation, and advanced augmented reality applications. It’s a tantalizing glimpse into the future of AI in our everyday lives.

Apple’s slow and steady approach to AI development is a reminder that not all innovation needs to happen at breakneck speed. Sometimes, getting things right can lead to a better product and a more sustainable business model, but it’s a considerable risk in this market.

02: Tailored Conversations with ChatGPT

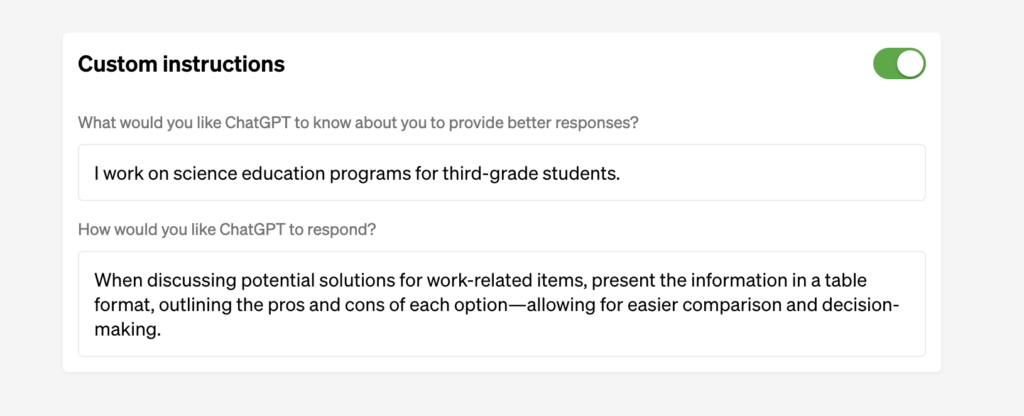

OpenAI has recently rolled out a new feature for ChatGPT – custom instructions. This feature allows users to provide high-level instructions that guide the model’s behavior throughout the conversation.

Here’s what you need to know:

- No more blank slates: Previously, every new message thread with ChatGPT started from a blank slate, meaning the model didn’t have any context from previous interactions. With custom instructions, you can now set the tone and context for the entire conversation.

- Easy to use: To use custom instructions, you tell ChatGPT what it should know about you and how you want it to respond. For example, you can tell it that you are an elementary school teacher and ask it to respond like Shakespeare. Or you can tell it that you’re a small business owner with a growing agency and ask it to respond only with facts in bullet point form.

- Flexible and versatile: Custom instructions can be used to guide the model’s behavior in various ways. You can instruct the model to use a specific tone, adopt a particular role, or stay focused on a topic, which speeds up your workflow because you no longer repeat the same setup in every chat.

- Available for all: This feature is rolling out to Plus users and will be available for all users in the next few weeks!
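
For readers who build on chat models through code, the idea in the bullets above can be sketched in a few lines of Python. This is only an illustration, not how OpenAI implements the feature: the `CustomInstructions` class, the `build_messages` function, and the choice to place the instructions in a system-style message are all assumptions made for this example, and nothing here calls a real API.

```python
from dataclasses import dataclass


@dataclass
class CustomInstructions:
    """Hypothetical container mirroring the two custom-instruction fields."""
    about_you: str       # what the model should know about you
    response_style: str  # how you would like it to respond


def build_messages(instructions: CustomInstructions, user_prompt: str) -> list[dict]:
    """Prepend the instructions to a new conversation.

    Assumption: the instructions are sent as a single system-style message
    ahead of the user's first prompt.
    """
    system_text = (
        f"About the user: {instructions.about_you}\n"
        f"How to respond: {instructions.response_style}"
    )
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_prompt},
    ]


# The teacher example from the list above.
teacher = CustomInstructions(
    about_you="I am an elementary school teacher.",
    response_style="Respond in the style of Shakespeare.",
)
messages = build_messages(teacher, "Explain photosynthesis.")
```

Every new conversation built this way starts with the same context, which is the "no more blank slates" effect described above.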

🧠 Custom instructions may seem simple, but their capabilities are powerful.

Whether you’re a developer looking to create more engaging chatbots or a business owner seeking to automate interactions faster, custom instructions can help you get more out of ChatGPT. They bring you one step closer to a highly customized chat experience.

03: Llama v2 is Open Sourcing AI Chat

There’s a new llama in town.

Meta, formerly Facebook, has released Llama 2, a collection of pre-trained and fine-tuned large language models (LLMs) optimized for dialogue.

Meta’s Llama 2 models range in scale from 7 billion to 70 billion parameters and outperform open-source chat models on most benchmarks tested. According to Meta’s human evaluations for helpfulness and safety, Llama 2-Chat may be a suitable substitute for the closed-source models we all know, use, and love.

Enter the reason I’m telling you about this today: Meta has made Llama 2 open-source and authorized its commercial use.

🧠 This opens a world of possibilities for businesses to leverage AI in new and innovative ways.

I’m reminded of when Facebook (before they were Meta, haha) changed the license for their React library 🔗 to the widely popular MIT license in 2017. Because of that decision, the adoption rate and innovation seen in the past five years have been phenomenal. Notably, React is now used in over 16 million other codebases.

This is like giving a kid a candy store key and saying, “Go wild!” But possibly a dentist’s kid, and the candy is healthier. You get the idea.

Meta’s decision to open-source Llama 2 and authorize its commercial use is a significant step towards democratizing AI. It allows businesses of all sizes to leverage advanced AI technologies previously only available to large corporations with proprietary technologies.

In a nutshell, Llama 2 is not just another AI model. It’s a powerful tool businesses can leverage to improve their services, streamline operations, and drive innovation. And with Meta’s decision to open-source Llama 2, the sky’s the limit for what businesses can achieve with dialogue-based AI chat.