01: BeFake is Beyond Reality

We’re constantly interrupted by, and reminded of, the world’s ‘highlight reels’ on social media.

It’s a social norm where authenticity and realness are often promised but rarely delivered. Not long ago, BeReal took the stage as a way to connect with friends daily and get a “real” glimpse of their lives, with no filters and no easy way to ‘fake’ the experience. A new platform is flipping the script.

Enter “BeFake,” a playful riff on BeReal that encourages users to embrace the imaginative side of AI.

Founded by Kristen Garcia Dumont, the former CEO of Machine Zone, BeFake offers a space where users can craft fantasy versions of themselves using AI-generated images. Instead of the usual selfie, imagine yourself as a medieval knight, a futuristic astronaut, or even a mythical creature. The possibilities are as vast as your imagination, and all it takes to create a unique AI avatar is typing a prompt.

Here’s what sets BeFake apart:

- Beyond Selfies: The app isn’t just about uploading photos. Users can submit text prompts, and the AI will generate visuals based on those prompts. Want to see yourself as a rockstar performing at a sold-out concert? Just type it in while you snap a photo from your couch.

- Authenticity in Fakeness: While the name might suggest otherwise, CEO Kristen Garcia Dumont believes BeFake offers a more authentic form of self-expression. In a world with immense pressure to present the “perfect” image, BeFake provides a space where users can be whimsical or serious without judgment.

- Community Engagement: The app isn’t just about creating; it’s about sharing and engaging. The most imaginative faux identities gain popularity within the BeFake community. Users can share images from prompts, react to their favorites, and start trends based on the most creative AI-generated visuals.

Ironically, an app known for being fake is built to encourage authentic creativity.

🧠 At its core, BeFake challenges traditional social media norms. It’s a reminder that in the digital age, self-expression isn’t just about showcasing reality. With AI’s power, we’re not just passive consumers of content but active creators, shaping our digital identities in ways previously thought impossible.

Snap and Meta have offered similar features for some time, letting you apply an AI-based filter to your content. This is the first time the AI filter is the content.

Join me at the AI Virtual Conference. I’ll be speaking at this completely free event next week. Claim your free ticket here.

02: Brainwaves to Soundwaves

Music and the brain have always shared a profound connection, or at least mine has, with a mix of European house music and ’90s hip-hop.

But what if we could delve deeper into this relationship, tapping into the very essence of musical perception within our neural circuits? A groundbreaking study led by neuroscientists at the University of California, Berkeley, has done just that, and the results are nothing short of incredible.

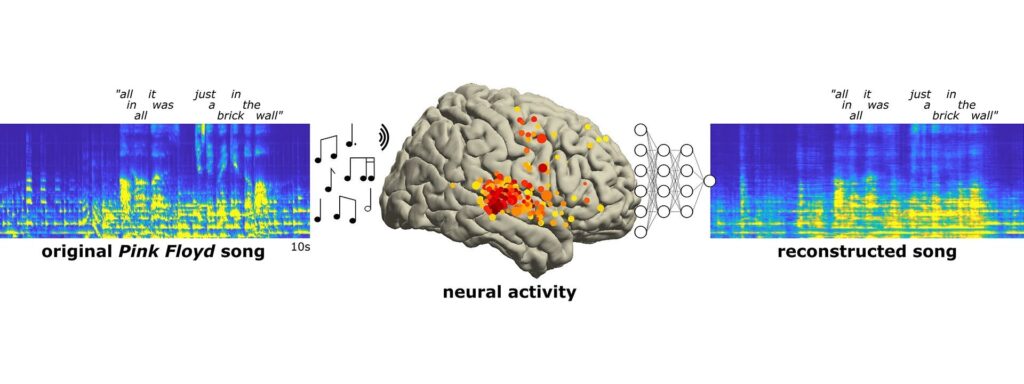

As patients at Albany Medical Center listened to the iconic chords of Pink Floyd’s “Another Brick in the Wall, Part 1,” researchers meticulously recorded the brain’s electrical activity. Their objective was ambitious: to capture the brain’s response to various musical attributes – tone, rhythm, harmony, and lyrics – and see if they could reconstruct the song based solely on these recordings.

After analyzing data from 29 patients, the UC Berkeley team, including Ludovic Bellier and Robert Knight, achieved a remarkable feat. They successfully reconstructed a recognizable segment of the song from brain recordings. The phrase “All in all it was just a brick in the wall” was discernible, with its rhythms intact, albeit with slightly muddled words.
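The general idea behind this kind of result is often called stimulus reconstruction: learn a mapping from recorded brain activity back to features of the sound. The toy Python sketch below illustrates that idea under heavy simplifying assumptions. The “recordings” are synthetic (each fake electrode is a noisy, scaled copy of a single audio envelope), and the decoder is plain ridge regression. This is not the Berkeley team’s pipeline, which worked with real iEEG data and a far richer analysis; every function name here is hypothetical.

```python
import math
import random


def simulate(n_samples, n_electrodes, noise, seed=0):
    """Toy data: each 'electrode' is a noisy, weighted copy of a hidden
    audio envelope. Purely synthetic; this is not real iEEG."""
    rng = random.Random(seed)
    envelope = [math.sin(2 * math.pi * t / 50) + 0.5 * math.sin(2 * math.pi * t / 13)
                for t in range(n_samples)]
    weights = [rng.uniform(0.5, 1.5) * rng.choice([-1, 1]) for _ in range(n_electrodes)]
    recordings = [[w * s + rng.gauss(0, noise) for w in weights] for s in envelope]
    return recordings, envelope


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_ridge(X, y, lam=1.0):
    """Ridge regression decoder weights: (X^T X + lam * I)^-1 X^T y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = math.sqrt(sum((x - ma) ** 2 for x in a))
    vb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (va * vb)


recordings, envelope = simulate(n_samples=600, n_electrodes=8, noise=1.0)
train_X, test_X = recordings[:400], recordings[400:]
train_y, test_y = envelope[:400], envelope[400:]
w = fit_ridge(train_X, train_y)
# Reconstruct the held-out envelope as a weighted sum of electrode signals.
reconstructed = [sum(wi * xi for wi, xi in zip(w, row)) for row in test_X]
print(f"correlation on held-out samples: {pearson(reconstructed, test_y):.2f}")
```

The decoder is fit on the first 400 samples and scored on the last 200, so the printed correlation reflects samples it never saw during fitting. Raising `noise` or lowering `n_electrodes` makes the toy reconstruction noticeably worse.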

🧠 This pioneering research showcases the potential of intracranial electroencephalography (iEEG) in capturing the musical elements of speech, known as prosody. These elements, including rhythm, stress, and intonation, convey meaning beyond words. While the technology isn’t ready to eavesdrop on our internal playlists, it holds promise for individuals with communication challenges. By capturing the musicality of speech, future brain-machine interfaces could offer a more natural and expressive form of communication, moving away from the robotic outputs of today.

The study also revealed intriguing insights into the brain’s auditory processing. For instance, certain areas of the auditory cortex responded to the onset of vocals, while others were attuned to sustained lyrics. The research also confirmed that the right hemisphere of the brain has a stronger affinity for music than the left.

As AI and neuroscience intertwine, studies like this pave the way for a deeper understanding of the human brain and its intricate relationship with music. Whether you’re a fan of Pink Floyd or just fascinated by the wonders of the brain, this research strikes a chord (you’re welcome), reminding us of the possibilities at the intersection of music, technology, and neuroscience.


03: AI-Powered Hope

When it comes to medical technology, innovation often brings hope. This is especially true for Julie Lloyd, a 65-year-old stroke survivor from the UK, who is relearning to walk independently, thanks to a pair of ‘high-tech trousers’ powered by artificial intelligence.

Dubbed “NeuroSkin,” these trousers are no ordinary clothing. They are embedded with electrodes that stimulate the wearer’s paralyzed leg, all controlled by advanced AI algorithms. Julie is part of the UK’s first trial of this “smart garment,” and her experience has been transformative. She describes the sensation as if her leg is being “guided,” allowing her to walk unaided for the first time in half a year.

The science behind it is fascinating. One side of the NeuroSkin monitors the electrical impulses of Julie’s healthy leg. The AI system then processes this data and recreates those impulses on her weakened leg, mirroring her natural stride. This is a significant step beyond the traditional Electrical Muscle Stimulation (EMS) used in stroke care, which typically delivers a more generalized “zapping” to reactivate weak muscles. NeuroSkin, on the other hand, offers a tailored experience, adapting in real time to the wearer’s unique needs.
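To make the mirroring idea concrete, here is a deliberately simplified Python sketch. Its assumptions are illustrative, not Kurage’s published method: the healthy leg’s muscle activity over one stride arrives as a list of values between 0 and 1; the weaker leg receives the same pattern shifted by half a stride (in normal walking, the legs move roughly half a cycle apart); and stimulation is capped at a hypothetical safety maximum. The `update_template` helper is a hypothetical stand-in for real-time adaptation that blends each new stride into a running average. All names, parameters, and numbers are made up for illustration.

```python
def mirror_pattern(healthy_cycle, max_intensity):
    """Map one stride of healthy-leg activity (0..1 per time step) to a
    stimulation schedule for the weaker leg.

    Hypothetical sketch: shifts the pattern by half a stride, because the
    legs alternate during walking, and scales it to a capped intensity.
    """
    n = len(healthy_cycle)
    shift = n // 2
    # Rotate right by half a cycle: output[t] = healthy_cycle[t - shift].
    shifted = healthy_cycle[n - shift:] + healthy_cycle[:n - shift]
    # Clip activity to [0, 1], then scale so intensity never exceeds the cap.
    return [min(max(a, 0.0), 1.0) * max_intensity for a in shifted]


def update_template(template, new_cycle, alpha=0.2):
    """Blend the newest healthy-leg stride into a running template
    (exponential moving average), so the schedule follows gradual
    changes in the wearer's gait. alpha is an illustrative choice."""
    return [(1 - alpha) * old + alpha * new for old, new in zip(template, new_cycle)]


# Illustrative usage with made-up numbers.
template = [0.0, 0.2, 0.8, 1.0, 0.6, 0.1, 0.0, 0.0]
latest_stride = [0.0, 0.3, 0.9, 1.0, 0.5, 0.1, 0.0, 0.0]
template = update_template(template, latest_stride)
schedule = mirror_pattern(template, max_intensity=30.0)
print([round(x, 1) for x in schedule])
```

The half-stride shift and the moving average are the simplest choices that capture “mirror the healthy leg” and “adapt over time.” A real medical device would also have to handle changing walking speeds, sensor noise, and clinical safety limits.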

The company behind this innovation, Kurage, based in Lyon, envisions the NeuroSkin as a “second skin.” Rudi Gombauld, the CEO of Kurage, explains that the garment is embedded with sensors that can interpret how the brain functions, providing invaluable sensory information to the AI system. This continuous feedback loop allows for a more natural and effective rehabilitation process.

🧠 The introduction of NeuroSkin signifies a potential paradigm shift in stroke rehabilitation. As AI continues to merge with medical technology, the possibilities for patient recovery expand.

Innovations like NeuroSkin bring hope to the millions affected by strokes, offering a glimpse into a future where technology and human resilience work hand in hand.